Large scale data analysis with spaCy

Let’s look at how spaCy handles the shared vocabulary and strings. To get started with spaCy, check out the blog post Getting started with spaCy.

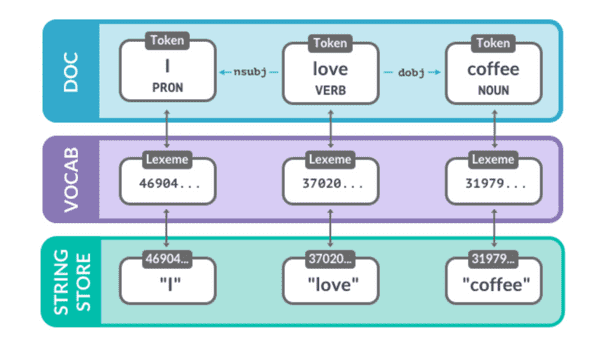

Shared vocab and string store

Vocab: Stores data (words, label schemes for tags and entities) shared across multiple documents.

- To save memory, spaCy encodes all strings to hash values to avoid storing the same value more than once.

- Strings are stored only once in the `StringStore`, available via `nlp.vocab.strings`.

- The `StringStore` is a lookup table that works in both directions.

coffee_hash = nlp.vocab.strings['coffee']

coffee_string = nlp.vocab.strings[coffee_hash]

NOTE: Internally, spaCy only communicates in hash IDs.

- Hashes can’t be reversed. If a string is not in the shared vocab, looking up its hash ID raises an error. That’s why we always need to provide the shared vocab.

- A `Doc` object also exposes its vocab and strings via `doc.vocab.strings`.
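The two-way lookup can be pictured with a small plain-Python sketch. This is not spaCy’s implementation: `ToyStringStore` is a hypothetical class, and it uses the first 8 bytes of a SHA-256 digest as a stand-in for spaCy’s own hash function. It only illustrates why a hash can be resolved back to a string when, and only when, the string was added to that store first.

```python
import hashlib


class ToyStringStore:
    """Hypothetical stand-in for a string store: maps strings to 64-bit hashes and back."""

    def __init__(self):
        self._hash_to_string = {}

    @staticmethod
    def hash_string(text):
        # Assumption: any stable 64-bit hash is good enough for this illustration.
        digest = hashlib.sha256(text.encode("utf8")).digest()
        return int.from_bytes(digest[:8], "big")

    def add(self, text):
        key = self.hash_string(text)
        self._hash_to_string[key] = text  # each distinct string is stored only once
        return key

    def __getitem__(self, key):
        if isinstance(key, str):
            return self.hash_string(key)  # string -> hash can always be computed
        return self._hash_to_string[key]  # hash -> string raises KeyError if unknown


store = ToyStringStore()
coffee_hash = store.add("coffee")
print(store[coffee_hash])              # coffee
print(store["coffee"] == coffee_hash)  # True

other_store = ToyStringStore()  # a separate store that never saw "coffee"
try:
    other_store[coffee_hash]
except KeyError:
    print("hash not found in this store")
```

The second store plays the role of a vocab that was not shared: the hash itself carries no information about the original string.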

Lexemes

Lexemes are context-independent entries in the vocabulary. We can get a lexeme by looking up a string or a hash ID in the vocab.

doc = nlp("I love coffee")

lexeme = nlp.vocab["coffee"]

# Print the lexical attributes

print(lexeme.text, lexeme.orth, lexeme.is_alpha)

OUTPUT

coffee 3197928453018144401 True

Lexemes contain context-independent information about a word:

- Word text: `lexeme.text` and `lexeme.orth` (hash ID)

- Lexical attributes like `lexeme.is_alpha`

- Not context-dependent parts such as part-of-speech tags, dependencies or entity labels

Data structures

The Doc object

The Doc is one of the central data structures in spaCy. It’s created automatically when we process a text with the nlp object.

Here’s how to create a Doc object manually:

# Create an nlp object

from spacy.lang.en import English

# Import the Doc class

from spacy.tokens import Doc

nlp = English()

# The words and spaces to create the Doc from

words = ['Hello', 'world', '!']

# List of boolean values indicating whether each word is followed by a space

spaces = [True, False, False]

# Create a doc manually

doc = Doc(nlp.vocab, words=words, spaces=spaces)

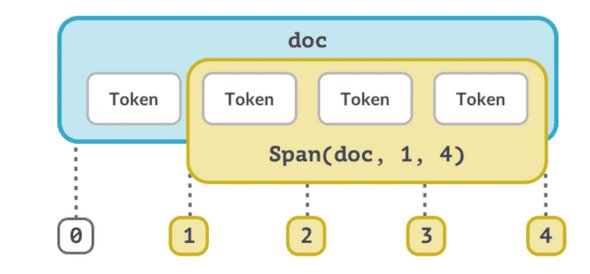
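To make the role of `spaces` concrete, here is a plain-Python sketch (no spaCy required) of how a list of words and a list of trailing-space flags determine the text. The helper name `join_words` is hypothetical. With spaCy itself, the same text would be read from `doc.text`.

```python
def join_words(words, spaces):
    """Rebuild text from words and flags saying whether a space follows each word."""
    if len(words) != len(spaces):
        raise ValueError("words and spaces must have the same length")
    return "".join(word + (" " if space else "") for word, space in zip(words, spaces))


words = ["Hello", "world", "!"]
spaces = [True, False, False]
text = join_words(words, spaces)
print(text)  # Hello world!
```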

The Span object

A Span is a slice of a Doc consisting of one or more tokens.

Here’s how to create a span object manually:

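# Import the Doc and Span classes

from spacy.tokens import Doc, Span

from spacy.lang.en import English

# Create an nlp object so that its vocab can be shared

nlp = English()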

# The words and spaces to create the doc from

words = ['Hello', 'world', '!']

spaces = [True, False, False]

# Create a Doc manually

doc = Doc(nlp.vocab, words=words, spaces=spaces)

# Create a span manually

span = Span(doc, 0, 2) # The end index is exclusive

# Create a span with an entity label

# Label names are all caps

span_with_label = Span(doc, 0, 2, label="GREETING")

# doc.ents is writable, so we can

# add the span to doc.ents

doc.ents = [span_with_label]

In the above code, the label is accessible via span_with_label.label_.

NOTE: Creating spaCy’s objects manually and modifying the entities will come in handy when we’re writing our own information extraction pipeline.

Best practices

- `Doc` and `Span` are very powerful and hold references and relationships of words and sentences.

  a. If your app needs to output strings, convert the doc as late as possible. Converting too early loses the relationships between the tokens.

  b. Use built-in token attributes wherever possible to keep things consistent.

- Don’t forget to pass in the shared `vocab`.

Word vectors and similarity

Comparing semantic similarity

- spaCy can compare two objects and predict their similarity: `doc1.similarity(doc2)`, `span1.similarity(span2)` and `token1.similarity(token2)`.

- The similarity score typically lies between 0 and 1; higher means more similar.

- In order to measure similarity, we need a larger spaCy model that has word vectors included, for example `en_core_web_md` or `en_core_web_lg`, not `en_core_web_sm`.

Examples:

import spacy

# Load a larger model with vectors

nlp = spacy.load('en_core_web_md')

# Compare two doc objects

doc1 = nlp("I like fast food")

doc2 = nlp("I like pizza")

print(doc1.similarity(doc2)) # 0.8627

# Compare two tokens

doc = nlp("I like pizza and pasta")

token1 = doc[2]

token2 = doc[4]

print(token1.similarity(token2)) # 0.7369

# Compare a document and a token

doc = nlp("I like pizza")

token = nlp("soap")[0]

print(doc.similarity(token)) # 0.3253

# Compare a span and a document

span = nlp("I like pizza and pasta")[2:5]

doc = nlp("McDonalds sells burgers")

print(span.similarity(doc)) # 0.6199

Word vectors in spaCy

- Similarity is determined using word vectors (multi-dimensional representations of the meanings of words).

- The word vectors are generated using an algorithm like Word2Vec.

- Vectors can be added to spaCy’s statistical models.

- By default, the similarity returned by spaCy is the cosine similarity between two vectors.

- Vectors for objects consisting of several tokens, like the `Doc` and the `Span` objects, default to the average of the token vectors. That’s why we get more value out of shorter phrases with fewer irrelevant words than out of longer phrases with many irrelevant words.

import spacy

# Load a larger model with vectors

nlp = spacy.load('en_core_web_md')

doc = nlp('I have a banana')

# Access the vector via the token.vector attribute

print(doc[3].vector)

OUTPUT

[ 2.0228e-01 -7.6618e-02 3.7032e-01 3.2845e-02 -4.1957e-01 7.2069e-02

-3.7476e-01 5.7460e-02 -1.2401e-02 5.2949e-01 -5.2380e-01 -1.9771e-01

-3.4147e-01 5.3317e-01 -2.5331e-02 1.7380e-01 1.6772e-01 8.3984e-01

5.5107e-02 1.0547e-01 3.7872e-01 2.4275e-01 1.4745e-02 5.5951e-01

1.2521e-01 -6.7596e-01 3.5842e-01 -4.0028e-02 9.5949e-02 -5.0690e-01

-8.5318e-02 1.7980e-01 3.3867e-01 1.3230e-01 3.1021e-01 2.1878e-01

1.6853e-01 1.9874e-01 -5.7385e-01 -1.0649e-01 2.6669e-01 1.2838e-01

-1.2803e-01 -1.3284e-01 1.2657e-01 8.6723e-01 9.6721e-02 4.8306e-01

2.1271e-01 -5.4990e-02 -8.2425e-02 2.2408e-01 2.3975e-01 -6.2260e-02

6.2194e-01 -5.9900e-01 4.3201e-01 2.8143e-01 3.3842e-02 -4.8815e-01

-2.1359e-01 2.7401e-01 2.4095e-01 4.5950e-01 -1.8605e-01 -1.0497e+00

-9.7305e-02 -1.8908e-01 -7.0929e-01 4.0195e-01 -1.8768e-01 5.1687e-01

1.2520e-01 8.4150e-01 1.2097e-01 8.8239e-02 -2.9196e-02 1.2151e-03

5.6825e-02 -2.7421e-01 2.5564e-01 6.9793e-02 -2.2258e-01 -3.6006e-01

-2.2402e-01 -5.3699e-02 1.2022e+00 5.4535e-01 -5.7998e-01 1.0905e-01

4.2167e-01 2.0662e-01 1.2936e-01 -4.1457e-02 -6.6777e-01 4.0467e-01

-1.5218e-02 -2.7640e-01 -1.5611e-01 -7.9198e-02 4.0037e-02 -1.2944e-01

-2.4090e-04 -2.6785e-01 -3.8115e-01 -9.7245e-01 3.1726e-01 -4.3951e-01

4.1934e-01 1.8353e-01 -1.5260e-01 -1.0808e-01 -1.0358e+00 7.6217e-02

1.6519e-01 2.6526e-04 1.6616e-01 -1.5281e-01 1.8123e-01 7.0274e-01

5.7956e-03 5.1664e-02 -5.9745e-02 -2.7551e-01 -3.9049e-01 6.1132e-02

5.5430e-01 -8.7997e-02 -4.1681e-01 3.2826e-01 -5.2549e-01 -4.4288e-01

8.2183e-03 2.4486e-01 -2.2982e-01 -3.4981e-01 2.6894e-01 3.9166e-01

-4.1904e-01 1.6191e-01 -2.6263e+00 6.4134e-01 3.9743e-01 -1.2868e-01

-3.1946e-01 -2.5633e-01 -1.2220e-01 3.2275e-01 -7.9933e-02 -1.5348e-01

3.1505e-01 3.0591e-01 2.6012e-01 1.8553e-01 -2.4043e-01 4.2886e-02

4.0622e-01 -2.4256e-01 6.3870e-01 6.9983e-01 -1.4043e-01 2.5209e-01

4.8984e-01 -6.1067e-02 -3.6766e-01 -5.5089e-01 -3.8265e-01 -2.0843e-01

2.2832e-01 5.1218e-01 2.7868e-01 4.7652e-01 4.7951e-02 -3.4008e-01

-3.2873e-01 -4.1967e-01 -7.5499e-02 -3.8954e-01 -2.9622e-02 -3.4070e-01

2.2170e-01 -6.2856e-02 -5.1903e-01 -3.7774e-01 -4.3477e-03 -5.8301e-01

-8.7546e-02 -2.3929e-01 -2.4711e-01 -2.5887e-01 -2.9894e-01 1.3715e-01

2.9892e-02 3.6544e-02 -4.9665e-01 -1.8160e-01 5.2939e-01 2.1992e-01

-4.4514e-01 3.7798e-01 -5.7062e-01 -4.6946e-02 8.1806e-02 1.9279e-02

3.3246e-01 -1.4620e-01 1.7156e-01 3.9981e-01 3.6217e-01 1.2816e-01

3.1644e-01 3.7569e-01 -7.4690e-02 -4.8480e-02 -3.1401e-01 -1.9286e-01

-3.1294e-01 -1.7553e-02 -1.7514e-01 -2.7587e-02 -1.0000e+00 1.8387e-01

8.1434e-01 -1.8913e-01 5.0999e-01 -9.1960e-03 -1.9295e-03 2.8189e-01

2.7247e-02 4.3409e-01 -5.4967e-01 -9.7426e-02 -2.4540e-01 -1.7203e-01

-8.8650e-02 -3.0298e-01 -1.3591e-01 -2.7765e-01 3.1286e-03 2.0556e-01

-1.5772e-01 -5.2308e-01 -6.4701e-01 -3.7014e-01 6.9393e-02 1.1401e-01

2.7594e-01 -1.3875e-01 -2.7268e-01 6.6891e-01 -5.6454e-02 2.4017e-01

-2.6730e-01 2.9860e-01 1.0083e-01 5.5592e-01 3.2849e-01 7.6858e-02

1.5528e-01 2.5636e-01 -1.0772e-01 -1.2359e-01 1.1827e-01 -9.9029e-02

-3.4328e-01 1.1502e-01 -3.7808e-01 -3.9012e-02 -3.4593e-01 -1.9404e-01

-3.3580e-01 -6.2334e-02 2.8919e-01 2.8032e-01 -5.3741e-01 6.2794e-01

5.6955e-02 6.2147e-01 -2.5282e-01 4.1670e-01 -1.0108e-02 -2.5434e-01

4.0003e-01 4.2432e-01 2.2672e-01 1.7553e-01 2.3049e-01 2.8323e-01

1.3882e-01 3.1218e-03 1.7057e-01 3.6685e-01 2.5247e-03 -6.4009e-01

-2.9765e-01 7.8943e-01 3.3168e-01 -1.1966e+00 -4.7156e-02 5.3175e-01]
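The cosine similarity and the averaging of token vectors described in this section can be sketched in plain Python. The vectors below are made-up 3-dimensional values chosen for illustration, not real model vectors, and `cosine_similarity` and `average_vector` are hypothetical helper names. The sketch shows how irrelevant words pull an averaged phrase vector away from the word we care about.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def average_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


# Assumption: toy vectors invented for this example only
toy_vectors = {
    "pizza": [0.9, 0.1, 0.0],
    "pasta": [0.8, 0.2, 0.0],
    "the": [0.0, 0.1, 0.9],
    "of": [0.0, 0.0, 1.0],
}

# A one-word phrase versus a longer phrase padded with unrelated words
short_phrase = average_vector([toy_vectors["pizza"]])
long_phrase = average_vector([toy_vectors[w] for w in ("the", "pizza", "of", "the")])

sim_short = cosine_similarity(short_phrase, toy_vectors["pasta"])
sim_long = cosine_similarity(long_phrase, toy_vectors["pasta"])
print(sim_short > sim_long)  # True
```

With these toy numbers, the padded phrase scores noticeably lower against "pasta" than the single word does, even though both phrases contain "pizza".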

Applications of similarity

- Recommendation systems

- Flagging duplicates like posts on an online platform

NOTE: There is no objective definition of “similarity”. It depends on the context and what the application needs to do.

import spacy

nlp = spacy.load('en_core_web_md')

doc1 = nlp("I like cats")

doc2 = nlp("I hate cats")

print(doc1.similarity(doc2)) # 0.95

Combining models and rules

Combining statistical models with rule-based systems is one of the most powerful techniques in an NLP toolbox.

| | Statistical models | Rule-based systems |
|---|---|---|
| Use cases | Applications that need to generalize from a few examples | Dictionaries with a finite number of examples |
| Real-world examples | Product names, person names, subject/object relationships | Countries of the world, cities, drug names, dog breeds |
| spaCy features | Entity recognizer, dependency parser, part-of-speech tagger | Tokenizer, Matcher, PhraseMatcher |

Adding statistical predictions

import spacy

from spacy.matcher import Matcher

nlp = spacy.load('en_core_web_md')

# Initialize with the shared vocab

matcher = Matcher(nlp.vocab)

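# spaCy v3 expects a list of patterns as the second argument

# (older v2 code used matcher.add('DOG', None, pattern))

matcher.add('DOG', [[{'LOWER': 'golden'}, {'LOWER': 'retriever'}]])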

doc = nlp("I have a Golden Retriever")

for match_id, start, end in matcher(doc):

    span = doc[start:end]

    print("Matched span:", span.text) # Golden Retriever

    # Span's root token decides the category of the phrase

    print("Root token:", span.root.text) # Retriever

    # Span's root head token is the syntactic "parent" that governs the phrase

    print("Root head token:", span.root.head.text) # have

    # Get the previous token and its POS tag

    print("Previous token:", doc[start - 1].text, doc[start - 1].pos_) # a DET

OUTPUT

Matched span: Golden Retriever

Root token: Retriever

Root head token: have

Previous token: a DET
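For intuition about what the `LOWER` pattern in this example asks for, here is a plain-Python sketch of a sliding-window match over tokens. It is a simplified illustration, not how spaCy’s `Matcher` is implemented: `find_lower_matches` is a hypothetical helper, and whitespace splitting stands in for the tokenizer. It also has no access to statistical predictions such as the root token or POS tags, which is exactly what the spaCy version adds.

```python
def find_lower_matches(tokens, pattern):
    """Return (start, end) pairs where the lowercased tokens equal the pattern.

    The end index is exclusive, so tokens[start:end] is the matched slice.
    """
    matches = []
    width = len(pattern)
    for start in range(len(tokens) - width + 1):
        window = [token.lower() for token in tokens[start:start + width]]
        if window == pattern:
            matches.append((start, start + width))
    return matches


tokens = "I have a Golden Retriever".split()  # assumption: whitespace tokenization
matches = find_lower_matches(tokens, ["golden", "retriever"])
for start, end in matches:
    print("Matched span:", " ".join(tokens[start:end]))  # Matched span: Golden Retriever
    print("Previous token:", tokens[start - 1])          # Previous token: a
```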

Efficient phrase matching

PhraseMatcher

- It works like regular expressions or keyword search, but also gives access to the tokens.

- It takes `Doc` objects as patterns.

- It is more efficient and faster than the `Matcher`.

- It is great for large volumes of text.

Example:

from spacy.matcher import PhraseMatcher

from spacy.lang.en import English

nlp = English()

matcher = PhraseMatcher(nlp.vocab)

# Pattern is a Doc object

pattern = nlp("Golden Retriever")

# spaCy v3 expects a list of Doc patterns (older v2 code used matcher.add("DOG", None, pattern))

matcher.add("DOG", [pattern])

doc = nlp("I have a Golden Retriever")

# Iterate over the matches

for match_id, start, end in matcher(doc):

    # Get the matched span

    span = doc[start:end]

    print("Matched span:", span.text)

OUTPUT

Matched span: Golden Retriever
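To see why exact phrase lookup can scale to a long terminology list, here is a plain-Python sketch that indexes the phrases once and then checks token windows with set lookups. This is only an illustration under simplifying assumptions (whitespace tokenization, a made-up breed list, a hypothetical `find_phrases` helper). It does not describe how spaCy’s `PhraseMatcher` works internally.

```python
def find_phrases(tokens, phrases):
    """Find exact multi-token phrases using set lookups instead of scanning every phrase."""
    # Index the phrases as tuples of tokens and remember which lengths occur
    phrase_set = {tuple(phrase.split()) for phrase in phrases}
    lengths = sorted({len(phrase) for phrase in phrase_set})
    matches = []
    for start in range(len(tokens)):
        for length in lengths:
            end = start + length  # exclusive end index
            if end <= len(tokens) and tuple(tokens[start:end]) in phrase_set:
                matches.append((start, end))
    return matches


breeds = ["Golden Retriever", "Border Collie", "Poodle"]  # made-up terminology list
tokens = "I have a Golden Retriever and a Poodle".split()
matches = find_phrases(tokens, breeds)
print([" ".join(tokens[start:end]) for start, end in matches])
# ['Golden Retriever', 'Poodle']
```

The work per token depends on the number of distinct phrase lengths rather than on the number of phrases, which is the property that matters for large dictionaries.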

Now that we understand large-scale data analysis with spaCy, let’s dive deeper into what’s happening under the hood with the processing pipelines involved.